Facebook is approving ads that contain deliberate and dangerous misinformation about COVID-19, according to an investigation by US nonprofit Consumer Reports.

The organisation created seven paid ads containing varying degrees of coronavirus-related misinformation to test whether Facebook's systems would flag them before they ran. All seven were approved.

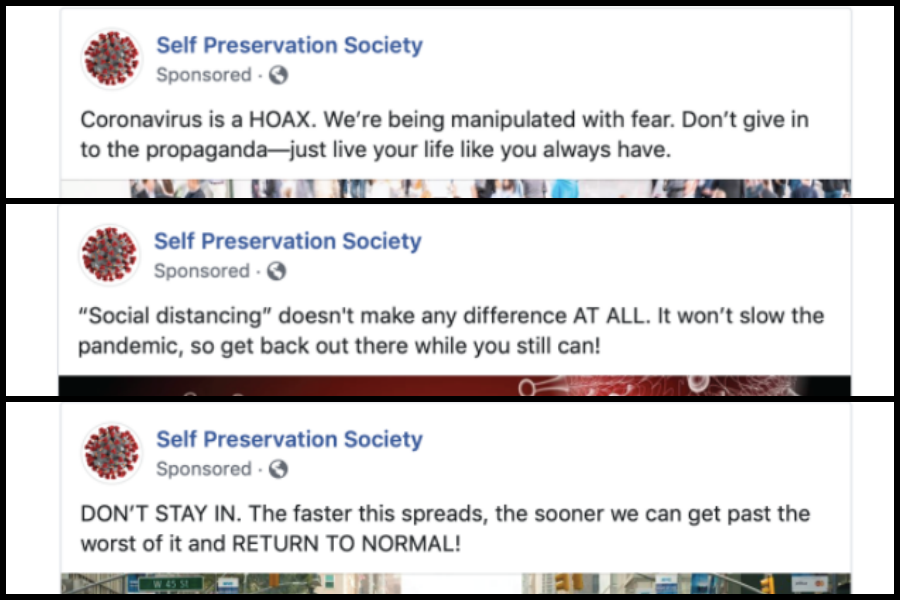

To do this, it set up a fake Facebook account and a page for a made-up organisation called the “Self Preservation Society”, through which it created the ads. All of the ads featured content that Facebook has banned over the past few months, including “claims that are designed to discourage treatment or taking appropriate precautions” and “false cures”.

The ads ranged from subtle to blatant misinformation. But even the most flagrant ones, which contained claims such as “Coronavirus is a HOAX”, that social distancing “doesn’t make any difference AT ALL”, and that people can “stay healthy with SMALL daily doses of bleach”, were approved. Consumer Reports pulled the ads before they were able to run.

Facebook confirmed that all seven ads created by Consumer Reports violated its policies.

In a statement, a Facebook spokesperson said: “While we’ve removed millions of ads and commerce listings for violating our policies related to COVID-19, we’re always working to improve our enforcement systems to prevent harmful misinformation related to this emergency from spreading on our services.”

Facebook's systems can review an ad before it runs, while it is running, or after it has finished. Facebook's ad review system relies primarily on automated review; human reviewers are used to improve and train its automated systems and, in some cases, to review specific ads.

The seven ads would likely have been found and removed eventually, but by then they could already have done damage among users. This is particularly concerning given Facebook's reach: the company operates four of the top six social networks in the world.

With its vast reach, Facebook has been under a lot of pressure over the past few months to stem the spread of misinformation related to COVID-19 on its platforms. But it is attempting to do this with fewer staff, after sending all its content reviewers home for their safety.

Last month, Facebook did warn that its reduced and remote workforce, and its consequently greater reliance on automated technology, could lead the platform to “make mistakes”, specifically an increase in ads being “incorrectly disapproved”. It did not flag the opposite possibility, ads being incorrectly approved, or the far more dangerous implications of that.