Generative AI has quickly become prominent in marketing and advertising, offering unmatched efficiency, personalised experiences, and creativity. We are told these tools, capable of producing copy, visuals, and campaigns from nothing, are the future of engaging audiences at scale. Yet, as we eagerly welcome this new nirvana, it’s crucial to pause and consider a fundamental, unsettling question: Is this revolutionary technology simply a mirror of our past? And isn’t effective advertising the kind that surprises and delights us by offering a fresh perspective on where we are now?

The power of generative AI relies on its training. Large language models (LLMs) and image generators depend on extensive datasets collected from the internet, digitised books, academic papers, and other media. As any keen observer will notice, the main issue is that much of this source material, especially the content that shapes our cultural narratives, is created by a small segment of the global population. This data is heavily biased towards English-language content and is disproportionately produced by people from Western, white, and male-centric viewpoints.

This isn't a conspiracy; it's a matter of history, and the figures confirm it. As of 2024, nearly half of the internet’s content is in English, even though only a small proportion of the world’s population are native English speakers.

In the creative industries shaping our culture, the disparity is even greater.

Approximately 81% of published authors are white.

In the music industry, men account for over 61% of artists, while holding a staggering 94% of producer credits.

A landmark study found that white men make up 75.7% of artists in major U.S. museum collections.

In film, only a tiny 2% of Best Director nominees in the history of the Academy Awards have been women.

When an LLM learns to write an article or create a visual campaign, it essentially learns from this biased corpus. It is statistically more likely to associate certain roles with men, certain appearances with specific ethnicities, and certain products with a narrow demographic. One of my colleagues describes this more simply: AI is walking backwards into the future, as it can only assess historical data to make projections.

In marketing, we must remember our goal is to connect with a diverse and global audience; in this respect, AI can present a serious and potentially harmful challenge for brands.

Consider the consequences. A generative AI tool tasked with creating an ad for a household item might automatically produce an image of a white, middle-class family living in a suburban area. Or it might generate ad copy for a tech product that uses gendered language. These outputs aren’t random; they mirror the patterns the model has learned. Instead of making genuine progress and embracing the true diversity of society, we risk reinforcing outdated stereotypes and promoting a narrow, unrepresentative view of the community. Rather than opening up new opportunities and reaching untapped markets, we could end up alienating large parts of the population who don’t see themselves reflected in our advertising.

Some will argue that these models can be ‘debiased’ through careful prompt engineering or fine-tuning with more representative datasets. While these methods are necessary, they often serve as post-production fixes rather than remedies for the root cause of the problem. They are like applying a fresh coat of paint to a building with a cracked foundation.

The underlying bias remains and can re-emerge in subtle, unexpected ways. As one study on AI bias in healthcare found, even with efforts to debias, AI-generated images of doctors still primarily depict male and white professionals, reflecting existing workforce statistics and maintaining a skewed perception.

The genuine opportunity for marketing pros lies not in simply accepting what AI generates but in actively using these tools to break out of the echo chamber. We should view generative AI as a partner, not a replacement. This means being the human element: checking for biases and deliberately prompting the models to be more inclusive. It also involves curating and refining models using intentionally diverse datasets that reflect a global society. We need to go beyond relying only on historical data and focus on creating new, fairer sources of information for these systems to learn from.

Ultimately, the question isn’t whether generative AI is biased. It is, and it is reflecting our existing biases back at us. The real question is whether we, as marketers and communicators, will use this powerful technology to amplify the same voices and perspectives that have always dominated, or whether we will seize the opportunity to create a more vibrant, inclusive, and authentic reflection of the world in our work. To build a future where marketing genuinely speaks to everyone, we must first learn to recognise the silent biases in our machines.

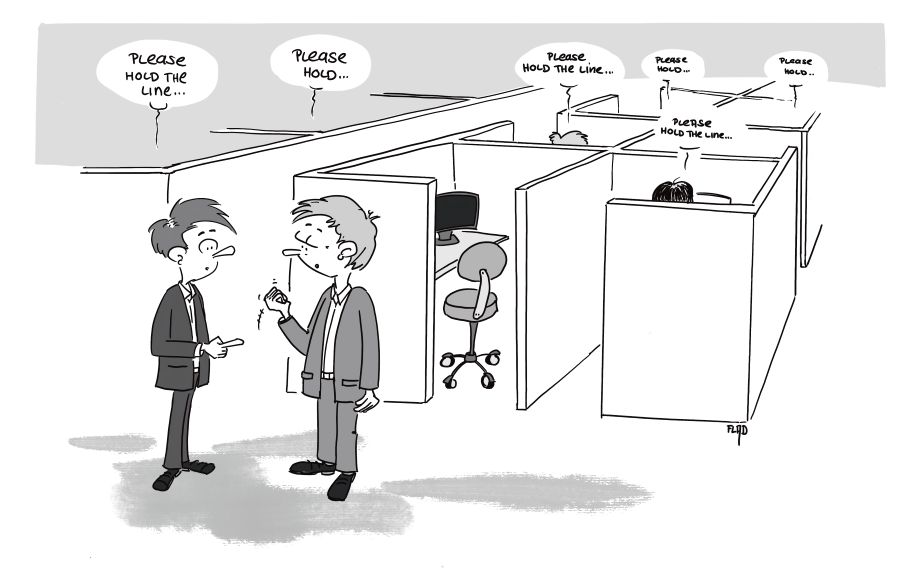

Woolley Marketing is a monthly column for Campaign Asia-Pacific, penned by Darren Woolley, the founder and global CEO of Trinity P3. The illustration accompanying this piece is by Dennis Flad, a Zurich-based marketing and advertising veteran.